Overview

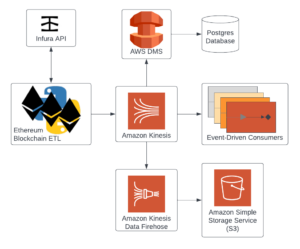

Sindri, a blockchain analytics startup that looks for patterns in Ethereum transactions, needed help optimizing their data ingestion and data pipeline on AWS to deliver accurate, comprehensive, and timely insights on market activity. The work included enhancing their streaming data processing and adding comprehensive monitoring, planning a performant data warehouse to back their analytics platform for consumers, and providing architectural advice on topics ranging from CI/CD pipelines to secure parameterization of databases and critical configuration settings across multiple pre-production development environments.

Tech stack used

Action plan

To support this small team with a limited budget, evolv implemented several business-critical processes for Sindri during a 12-day engagement while creating a roadmap for long-term, scalable, and cost-effective use of their AWS infrastructure. When adverse events required disaster recovery, evolv helped the team rebuild their data and processes with a focus on security.

Steps

1

Worked closely with Sindri’s engineers and key stakeholders to define the data sources and to design the architecture, the security structure, and the development of the cloud environment and enterprise data warehouse (EDW)

2

Optimized data ingestion for speed and security, built business-critical monitoring, and assisted with system outages that compromised database access

3

Provided documentation and discussed with the team further optimizations to their development processes, from business-critical ETL to security, disaster recovery, and CI/CD

Results

Optimized data ingestion

to leverage low-cost, parallel compute resources, ensuring real-time synchronization with the Ethereum blockchain and concurrent historical data backfilling.
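
One common way to run a historical backfill in parallel is to split the block range into fixed-size chunks and hand each chunk to a separate worker, while a separate process follows the head of the chain. The sketch below illustrates only the chunk planning. The function name, the chunk size, and the inclusive-range convention are assumptions for illustration and are not taken from Sindri's pipeline.

```python
from typing import List, Tuple


def plan_backfill_chunks(
    first_block: int, last_block: int, chunk_size: int
) -> List[Tuple[int, int]]:
    """Split the inclusive range [first_block, last_block] into inclusive chunks.

    Hypothetical helper: each returned (start, end) pair could be assigned
    to an independent, low-cost worker.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    chunks: List[Tuple[int, int]] = []
    start = first_block
    while start <= last_block:
        # The final chunk may be shorter than chunk_size.
        end = min(start + chunk_size - 1, last_block)
        chunks.append((start, end))
        start = end + 1
    return chunks
```

Because the chunks do not overlap, workers can process them in any order, and a failed chunk can be retried without touching the others.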

Implemented a monitoring task

to detect system outages, data gaps, and failures, enabling engineers to receive alerts on data ingestion issues via a Discord bot.
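
A data-gap check of this kind can be sketched as a comparison between the block numbers already ingested and the range that should be present. The example below is a minimal illustration under assumptions: the function names, the message wording, and the idea of passing block numbers as a plain list are hypothetical, and the step that actually sends the text to Discord is left out.

```python
from typing import Iterable, List, Tuple


def find_block_gaps(
    ingested: Iterable[int], first_expected: int, last_expected: int
) -> List[Tuple[int, int]]:
    """Return inclusive (start, end) ranges of block numbers that are missing."""
    gaps: List[Tuple[int, int]] = []
    next_expected = first_expected
    for block in sorted(set(ingested)):
        if block < first_expected:
            continue  # outside the window being checked
        if block > last_expected:
            break
        if block > next_expected:
            gaps.append((next_expected, block - 1))
        next_expected = block + 1
    # Anything after the last ingested block is also missing.
    if next_expected <= last_expected:
        gaps.append((next_expected, last_expected))
    return gaps


def format_gap_alert(gaps: List[Tuple[int, int]]) -> str:
    """Build a short alert message; returns an empty string when nothing is missing."""
    if not gaps:
        return ""
    parts = [str(s) if s == e else f"{s}-{e}" for s, e in gaps]
    return "Missing blocks: " + ", ".join(parts)
```

A scheduled task could run the check over a recent window and post the message only when it is non-empty, which keeps the alert channel quiet during normal operation.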

Enhanced database security

by introducing role-based access for users and applications, and tuned database parameters for high write performance based on the critical characteristics of the source data.